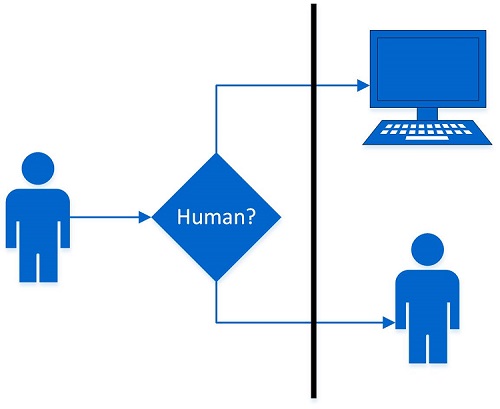

The Turing test is an assessment of a machine's ability to exhibit intelligent behavior, with that behavior measured against that of a human. The test, and hence the name, comes from a really interesting paper Alan Turing wrote in 1950 titled "Computing Machinery and Intelligence". He proposed that a human evaluator should remotely judge natural language conversations with both a human and a machine, knowing that there is one of each, and then try to distinguish which conversation belongs to the human. If the evaluator can't consistently tell which is which, then the machine is said to have passed the test.
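The pass criterion described above can be sketched in a few lines of Python. This is only an illustration, not a procedure taken from Turing's paper: the function names, the "A"/"B" labels for the two conversations, and the 0.6 threshold standing in for "consistently" are all assumptions made for the example.

```python
def identification_rate(guesses, truths):
    """Fraction of trials in which the evaluator correctly picked the human.

    guesses[i] is the conversation ("A" or "B") the evaluator believed was
    the human in trial i; truths[i] is the conversation that really was.
    """
    if not guesses or len(guesses) != len(truths):
        raise ValueError("guesses and truths must be non-empty and equal length")
    correct = sum(g == t for g, t in zip(guesses, truths))
    return correct / len(guesses)


def machine_passes(guesses, truths, threshold=0.6):
    """The machine 'passes' if the evaluator cannot consistently find the human.

    The threshold is a hypothetical cutoff: at or above it, we treat the
    evaluator as reliably telling human from machine.
    """
    return identification_rate(guesses, truths) < threshold


# Four trials: the evaluator is right three times out of four.
guesses = ["A", "B", "A", "A"]
truths = ["A", "B", "B", "A"]
print(identification_rate(guesses, truths))
print(machine_passes(guesses, truths))
```

With pure guessing the evaluator would be right about half the time, so any cutoff has to sit somewhere above 0.5. Where exactly to put it is a judgment call, which is part of why claims of "passing" are so often disputed.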

No machine has yet consistently passed the test, though some have claimed to pass and some have been tested in limited circumstances. While a machine will eventually pass, it isn't likely to have human-level intelligence when it does. Unfortunately, most recent attempts at passing the test rely on tricks and canned responses rather than on efforts to actually develop the capacity to mimic human thought processes.

While the Turing test has been a foundation of artificial intelligence research for more than fifty years, it certainly has numerous weaknesses and is but one test for ultimately determining whether a machine has true intelligence. One significant weakness is that it depends heavily on the intelligence of both the human evaluator and the human being evaluated. If either human behaves strangely within the conversation, the outcome can be skewed: for example, the evaluator may mistake odd replies from the human participant for a machine having trouble comprehending the conversation.

A challenge to the Turing test comes from a 1980 paper by John Searle titled "Minds, Brains, and Programs", which proposed a thought experiment called the "Chinese Room". The paper argues that the only way a machine can have human-level intelligence is if it truly understands what it is saying. It could pass notes with symbols back and forth all day, but if it doesn't understand them, then it really isn't "thinking".
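The idea of passing symbols back and forth without understanding them can be illustrated with a toy lookup table. This is a loose sketch and not Searle's own formulation: the rulebook entries, the fallback reply, and the function name are all made up for the example.

```python
# Hypothetical rulebook: incoming symbols mapped to outgoing symbols.
# The program never uses the meanings given in the comments.
RULEBOOK = {
    "你好吗": "我很好",            # "How are you?" -> "I am fine."
    "你叫什么名字": "我没有名字",  # "What is your name?" -> "I have no name."
}


def room_reply(symbols, rulebook=RULEBOOK, fallback="请再说一遍"):
    """Return the reply the rulebook dictates for the incoming symbols.

    This is pure string matching; unmatched input gets the fallback
    ("Please say that again.").
    """
    return rulebook.get(symbols, fallback)


print(room_reply("你好吗"))
```

From outside the room the replies look sensible, yet nothing in the code represents what any of the symbols mean. That gap between behavior and understanding is the one Searle's argument points at.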

Eventually, machine intelligence will far surpass human intelligence. It is simply a matter of time.